A simple Darcy-Flow dataset

This example shows how to load and inspect the small Darcy-Flow dataset that ships with the package.

Import the library

We first import our neuralop library and required dependencies.

import matplotlib.pyplot as plt

from neuralop.data.datasets import load_darcy_flow_small

from neuralop.layers.embeddings import GridEmbedding2D

Load the dataset

Training samples are 16x16 and we load testing samples at both 16x16 and 32x32 (to test resolution invariance).

train_loader, test_loaders, data_processor = load_darcy_flow_small(

    n_train=100, batch_size=4,

    test_resolutions=[16, 32], n_tests=[50, 50], test_batch_sizes=[4, 2],

)

train_dataset = train_loader.dataset

Loading test db for resolution 16 with 50 samples

Loading test db for resolution 32 with 50 samples

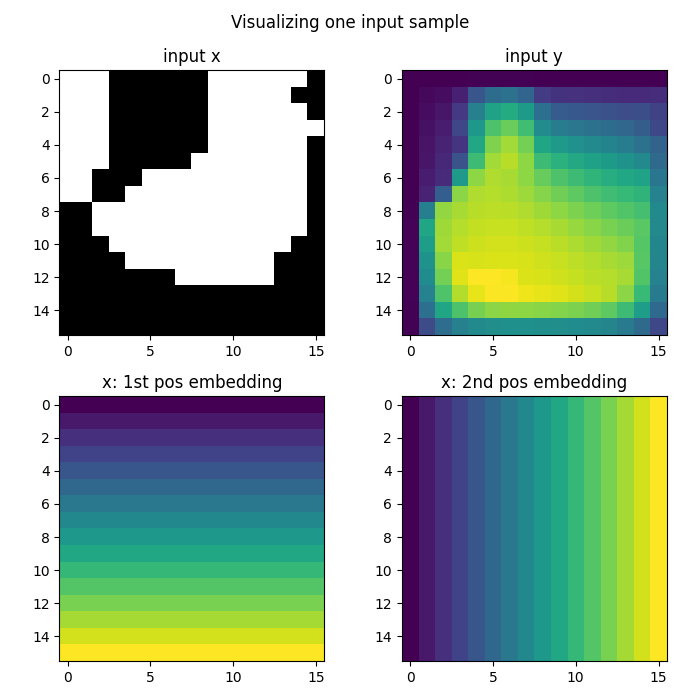

Visualizing the data

for res, test_loader in test_loaders.items():

    print(res)

    # Get first batch

    batch = next(iter(test_loader))

    x = batch['x']

    y = batch['y']

    print(f'Testing samples for res {res} have shape {x.shape[1:]}')

data = train_dataset[0]

x = data['x']

y = data['y']

print(f'Training samples have shape {x.shape[1:]}')

# Which sample to view

index = 0

data = train_dataset[index]

data = data_processor.preprocess(data, batched=False)

# The first step of the default FNO model is a grid-based

# positional embedding. We will add it manually here to

# visualize the channels appended by this embedding.

positional_embedding = GridEmbedding2D(in_channels=1)

# at train time, data will be collated with a batch dim.

# we create a batch dim to pass into the embedding, then re-squeeze

x = positional_embedding(data['x'].unsqueeze(0)).squeeze(0)

y = data['y']

fig = plt.figure(figsize=(7, 7))

ax = fig.add_subplot(2, 2, 1)

ax.imshow(x[0], cmap='gray')

ax.set_title('input x')

ax = fig.add_subplot(2, 2, 2)

ax.imshow(y.squeeze())

ax.set_title('input y')

ax = fig.add_subplot(2, 2, 3)

ax.imshow(x[1])

ax.set_title('x: 1st pos embedding')

ax = fig.add_subplot(2, 2, 4)

ax.imshow(x[2])

ax.set_title('x: 2nd pos embedding')

fig.suptitle('Visualizing one input sample', y=0.98)

plt.tight_layout()

fig.show()
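To make the two extra channels in the bottom row of the figure concrete, here is a minimal NumPy sketch of what a grid-based positional embedding appends to an input. The helper `append_grid_channels` is hypothetical and not part of neuralop. The `[0, 1)` coordinate range, the left-edge sampling, and the channel order are assumptions made for illustration, and the library's `GridEmbedding2D` may use a different convention.

```python
import numpy as np


def append_grid_channels(x, boundaries=((0.0, 1.0), (0.0, 1.0))):
    """Append two coordinate channels to an array of shape (C, H, W).

    Hypothetical helper for illustration only; not the neuralop API.
    """
    _, height, width = x.shape
    (h0, h1), (w0, w1) = boundaries
    # Assumption: coordinates are sampled at evenly spaced points that
    # include the lower boundary and exclude the upper one.
    rows = np.linspace(h0, h1, height, endpoint=False)
    cols = np.linspace(w0, w1, width, endpoint=False)
    # indexing="ij" keeps the (H, W) layout of the input.
    grid_h, grid_w = np.meshgrid(rows, cols, indexing="ij")
    # Stack the original channels with the two coordinate channels.
    return np.concatenate([x, grid_h[None], grid_w[None]], axis=0)


x = np.zeros((1, 16, 16))
x_embedded = append_grid_channels(x)
print(x_embedded.shape)  # (3, 16, 16)
```

In this sketch, channel 1 varies only along the height axis and channel 2 only along the width axis, which is the kind of smooth gradient the two positional-embedding panels display.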

16

Testing samples for res 16 have shape torch.Size([1, 16, 16])

32

Testing samples for res 32 have shape torch.Size([1, 32, 32])

Training samples have shape torch.Size([16, 16])
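The test and training shapes printed above differ because `x.shape[1:]` drops a different leading dimension in each case: the batch dimension for a batch drawn from a loader, and the channel dimension for a single sample taken from the dataset. The sketch below reproduces this with NumPy arrays as stand-ins. The zero-filled arrays are placeholders, and the `(1, 16, 16)` sample shape is inferred from the `unsqueeze(0)` call and `in_channels=1` used in the code above.

```python
import numpy as np

# Placeholder stand-ins for the real data: one channel on a 16x16 grid.
sample_x = np.zeros((1, 16, 16))            # (channels, height, width)
batch_x = np.stack([sample_x] * 4, axis=0)  # (batch, channels, height, width)

# shape[1:] drops whichever dimension comes first.
print(batch_x.shape[1:])   # (1, 16, 16): batch dimension dropped
print(sample_x.shape[1:])  # (16, 16): channel dimension dropped
print(sample_x.shape)      # (1, 16, 16): full shape of one sample
```

Printing `x.shape` for the unbatched training sample would therefore report the single channel as well.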
