A simple Darcy-Flow dataset

An introduction to the small Darcy-Flow example dataset we ship with the package.

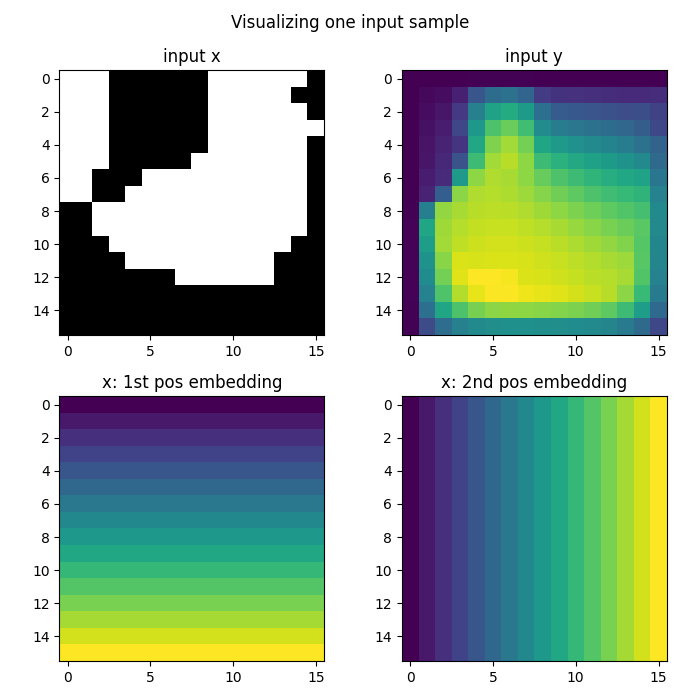

The Darcy-Flow equation is a fundamental partial differential equation (PDE) in fluid mechanics that describes the flow of a fluid through a porous medium. In this tutorial, we explore the structure of the dataset and visualize how its samples are processed for neural operator training.
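For reference, the steady-state Darcy-Flow problem is commonly written as the elliptic PDE below. This is a sketch of the standard textbook form, not a statement taken from this tutorial: the unit-square domain, the zero boundary condition, and the symbols a (permeability coefficient), u (pressure or solution field), and f (forcing term) are assumptions made here for illustration.

$$
\begin{aligned}
-\nabla \cdot \bigl( a(x)\, \nabla u(x) \bigr) &= f(x), && x \in (0, 1)^2, \\
u(x) &= 0, && x \in \partial (0, 1)^2 .
\end{aligned}
$$

Under this formulation, an operator-learning task would map the coefficient field a to the solution field u, each sampled on a regular grid such as 16x16 or 32x32.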

Import the library

We first import our neuralop library and required dependencies.

import matplotlib.pyplot as plt
from neuralop.data.datasets import load_darcy_flow_small
from neuralop.layers.embeddings import GridEmbedding2D

Load the dataset

Training samples have a resolution of 16x16. We load test samples at both 16x16 and 32x32 to check resolution invariance.

train_loader, test_loaders, data_processor = load_darcy_flow_small(
    n_train=20,
    batch_size=4,
    test_resolutions=[16, 32],
    n_tests=[10, 10],
    test_batch_sizes=[4, 2],
)

train_dataset = train_loader.dataset
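As a quick sanity check on the arguments above, the following sketch works out how many batches each loader would yield per pass. It does not call neuralop. It assumes the loaders keep the final partial batch (the default behavior of a PyTorch `DataLoader`), and the helper `num_batches` is a hypothetical name introduced only for this illustration.

```python
import math


def num_batches(n_samples: int, batch_size: int) -> int:
    """Batches per pass, assuming the last partial batch is kept."""
    return math.ceil(n_samples / batch_size)


# Values copied from the load_darcy_flow_small call above.
n_train, batch_size = 20, 4
test_resolutions = [16, 32]
n_tests = [10, 10]
test_batch_sizes = [4, 2]

train_batches = num_batches(n_train, batch_size)

# One entry per test resolution: 10 samples at batch size 4, 10 at batch size 2.
test_batches = {
    res: num_batches(n, bs)
    for res, n, bs in zip(test_resolutions, n_tests, test_batch_sizes)
}

print(train_batches)  # 5
print(test_batches)   # {16: 3, 32: 5}
```

With these settings, the 16x16 test loader ends on a partial batch of 2 samples, which is worth keeping in mind when averaging per-batch metrics.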


Download in progress: 19.56%

Download in progress: 19.58%

Download in progress: 19.60%

Download in progress: 19.61%

Download in progress: 19.63%

Download in progress: 19.65%

Download in progress: 19.67%

Download in progress: 19.68%

Download in progress: 19.70%

Download in progress: 19.72%

Download in progress: 19.74%

Download in progress: 19.75%

Download in progress: 19.77%

Download in progress: 19.79%

Download in progress: 19.81%

Download in progress: 19.82%

Download in progress: 19.84%

Download in progress: 19.86%

Download in progress: 19.88%

Download in progress: 19.89%

Download in progress: 19.91%

Download in progress: 19.93%

Download in progress: 19.95%

Download in progress: 19.96%

Download in progress: 19.98%

Download in progress: 20.00%

Download in progress: 20.02%

Download in progress: 20.03%

Download in progress: 20.05%

Download in progress: 20.07%

Download in progress: 20.09%

Download in progress: 20.10%

Download in progress: 20.12%

Download in progress: 20.14%

Download in progress: 20.16%

Download in progress: 20.17%

Download in progress: 20.19%

Download in progress: 20.21%

Download in progress: 20.23%

Download in progress: 20.24%

Download in progress: 20.26%

Download in progress: 20.28%

Download in progress: 20.30%

Download in progress: 20.31%

Download in progress: 20.33%

Download in progress: 20.35%

Download in progress: 20.37%

Download in progress: 20.38%

Download in progress: 20.40%

Download in progress: 20.42%

Download in progress: 20.44%

Download in progress: 20.45%

Download in progress: 20.47%

Download in progress: 20.49%

Download in progress: 20.51%

Download in progress: 20.52%

Download in progress: 20.54%

Download in progress: 20.56%

Download in progress: 20.58%

Download in progress: 20.59%

Download in progress: 20.61%

Download in progress: 20.63%

Download in progress: 20.65%

Download in progress: 20.66%

Download in progress: 20.68%

Download in progress: 20.70%

Download in progress: 20.72%

Download in progress: 20.73%

Download in progress: 20.75%

Download in progress: 20.77%

Download in progress: 20.79%

Download in progress: 20.80%

Download in progress: 20.82%

Download in progress: 20.84%

Download in progress: 20.86%

Download in progress: 20.87%

Download in progress: 20.89%

Download in progress: 20.91%

Download in progress: 20.93%

Download in progress: 20.94%

Download in progress: 20.96%

Download in progress: 20.98%

Download in progress: 21.00%

Download in progress: 21.01%

Download in progress: 21.03%

Download in progress: 21.05%

Download in progress: 21.07%

Download in progress: 21.08%

Download in progress: 21.10%

Download in progress: 21.12%

Download in progress: 21.14%

Download in progress: 21.15%

Download in progress: 21.17%

Download in progress: 21.19%

Download in progress: 21.21%

Download in progress: 21.22%

Download in progress: 21.24%

Download in progress: 21.26%

Download in progress: 21.28%

Download in progress: 21.29%

Download in progress: 21.31%

Download in progress: 21.33%

Download in progress: 21.34%

Download in progress: 21.36%

Download in progress: 21.38%

Download in progress: 21.40%

Download in progress: 21.41%

Download in progress: 21.43%

Download in progress: 21.45%

Download in progress: 21.47%

Download in progress: 21.48%

Download in progress: 21.50%

Download in progress: 21.52%

Download in progress: 21.54%

Download in progress: 21.55%

Download in progress: 21.57%

Download in progress: 21.59%

Download in progress: 21.61%

Download in progress: 21.62%

Download in progress: 21.64%

Download in progress: 21.66%

Download in progress: 21.68%

Download in progress: 21.69%

Download in progress: 21.71%

Download in progress: 21.73%

Download in progress: 21.75%

Download in progress: 21.76%

Download in progress: 21.78%

Download in progress: 21.80%

Download in progress: 21.82%

Download in progress: 21.83%

Download in progress: 21.85%

Download in progress: 21.87%

Download in progress: 21.89%

Download in progress: 21.90%

Download in progress: 21.92%

Download in progress: 21.94%

Download in progress: 21.96%

Download in progress: 21.97%

Download in progress: 21.99%

Download in progress: 22.01%

Download in progress: 22.03%

Download in progress: 22.04%

Download in progress: 22.06%

Download in progress: 22.08%

Download in progress: 22.10%

Download in progress: 22.11%

Download in progress: 22.13%

Download in progress: 22.15%

Download in progress: 22.17%

Download in progress: 22.18%

Download in progress: 22.20%

Download in progress: 22.22%

Download in progress: 22.24%

Download in progress: 22.25%

Download in progress: 22.27%

Download in progress: 22.29%

Download in progress: 22.31%

Download in progress: 22.32%

Download in progress: 22.34%

Download in progress: 22.36%

Download in progress: 22.38%

Download in progress: 22.39%

Download in progress: 22.41%

Download in progress: 22.43%

Download in progress: 22.45%

Download in progress: 22.46%

Download in progress: 22.48%

Download in progress: 22.50%

Download in progress: 22.52%

Download in progress: 22.53%

Download in progress: 22.55%

Download in progress: 22.57%

Download in progress: 22.59%

Download in progress: 22.60%

Download in progress: 22.62%

Download in progress: 22.64%

Download in progress: 22.66%

Download in progress: 22.67%

Download in progress: 22.69%

Download in progress: 22.71%

Download in progress: 22.73%

Download in progress: 22.74%

Download in progress: 22.76%

Download in progress: 22.78%

Download in progress: 22.80%

Download in progress: 22.81%

Download in progress: 22.83%

Download in progress: 22.85%

Download in progress: 22.87%

Download in progress: 22.88%

Download in progress: 22.90%

Download in progress: 22.92%

Download in progress: 22.94%

Download in progress: 22.95%

Download in progress: 22.97%

Download in progress: 22.99%

Download in progress: 23.01%

Download in progress: 23.02%

Download in progress: 23.04%

Download in progress: 23.06%

Download in progress: 23.08%

Download in progress: 23.09%

Download in progress: 23.11%

Download in progress: 23.13%

Download in progress: 23.15%

Download in progress: 23.16%

Download in progress: 23.18%

Download in progress: 23.20%

Download in progress: 23.22%

Download in progress: 23.23%

Download in progress: 23.25%

Download in progress: 23.27%

Download in progress: 23.29%

Download in progress: 23.30%

Download in progress: 23.32%

Download in progress: 23.34%

Download in progress: 23.36%

Download in progress: 23.37%

Download in progress: 23.39%

Download in progress: 23.41%

Download in progress: 23.43%

Download in progress: 23.44%

Download in progress: 23.46%

Download in progress: 23.48%

Download in progress: 23.50%

Download in progress: 23.51%

Download in progress: 23.53%

Download in progress: 23.55%

Download in progress: 23.57%

Download in progress: 23.58%

Download in progress: 23.60%

Download in progress: 23.62%

Download in progress: 23.64%

Download in progress: 23.65%

Download in progress: 23.67%

Download in progress: 23.69%

Download in progress: 23.70%

Download in progress: 23.72%

Download in progress: 23.74%

Download in progress: 23.76%

Download in progress: 23.77%

Download in progress: 23.79%

Download in progress: 23.81%

Download in progress: 23.83%

Download in progress: 23.84%

Download in progress: 23.86%

Download in progress: 23.88%

Download in progress: 23.90%

Download in progress: 23.91%

Download in progress: 23.93%

Download in progress: 23.95%

Download in progress: 23.97%

Download in progress: 23.98%

Download in progress: 24.00%

Download in progress: 24.02%

Download in progress: 24.04%

Download in progress: 24.05%

Download in progress: 24.07%

Download in progress: 24.09%

Download in progress: 24.11%

Download in progress: 24.12%

Download in progress: 24.14%

Download in progress: 24.16%

Download in progress: 24.18%

Download in progress: 24.19%

Download in progress: 24.21%

Download in progress: 24.23%

Download in progress: 24.25%

Download in progress: 24.26%

Download in progress: 24.28%

Download in progress: 24.30%

Download in progress: 24.32%

Download in progress: 24.33%

Download in progress: 24.35%

Download in progress: 24.37%

Download in progress: 24.39%

Download in progress: 24.40%

Download in progress: 24.42%

Download in progress: 24.44%

Download in progress: 24.46%

Download in progress: 24.47%

Download in progress: 24.49%

Download in progress: 24.51%

Download in progress: 24.53%

Download in progress: 24.54%

Download in progress: 24.56%

Download in progress: 24.58%

Download in progress: 24.60%

Download in progress: 24.61%

Download in progress: 24.63%

Download in progress: 24.65%

Download in progress: 24.67%

Download in progress: 24.68%

Download in progress: 24.70%

Download in progress: 24.72%

Download in progress: 24.74%

Download in progress: 24.75%

Download in progress: 24.77%

Download in progress: 24.79%

Download in progress: 24.81%

Download in progress: 24.82%

Download in progress: 24.84%

Download in progress: 24.86%

Download in progress: 24.88%

Download in progress: 24.89%

Download in progress: 24.91%

Download in progress: 24.93%

Download in progress: 24.95%

Download in progress: 24.96%

Download in progress: 24.98%

Download in progress: 25.00%

Download in progress: 25.02%

Download in progress: 25.03%

Download in progress: 25.05%

Download in progress: 25.07%

Download in progress: 25.09%

Download in progress: 25.10%

Download in progress: 25.12%

Download in progress: 25.14%

Download in progress: 25.16%

Download in progress: 25.17%

Download in progress: 25.19%

Download in progress: 25.21%

Download in progress: 25.23%

Download in progress: 25.24%

Download in progress: 25.26%

Download in progress: 25.28%

Download in progress: 25.30%

Download in progress: 25.31%

Download in progress: 25.33%

Download in progress: 25.35%

Download in progress: 25.37%

Download in progress: 25.38%

Download in progress: 25.40%

Download in progress: 25.42%

Download in progress: 25.44%

Download in progress: 25.45%

Download in progress: 25.47%

Download in progress: 25.49%

Download in progress: 25.51%

Download in progress: 25.52%

Download in progress: 25.54%

Download in progress: 25.56%

Download in progress: 25.58%

Download in progress: 25.59%

Download in progress: 25.61%

Download in progress: 25.63%

Download in progress: 25.65%

Download in progress: 25.66%

Download in progress: 25.68%

Download in progress: 25.70%

Download in progress: 25.72%

Download in progress: 25.73%

Download in progress: 25.75%

Download in progress: 25.77%

Download in progress: 25.79%

Download in progress: 25.80%

Download in progress: 25.82%

Download in progress: 25.84%

Download in progress: 25.86%

Download in progress: 25.87%

Download in progress: 25.89%

Download in progress: 25.91%

Download in progress: 25.93%

Download in progress: 25.94%

Download in progress: 25.96%

Download in progress: 25.98%

Download in progress: 26.00%

Download in progress: 26.01%

Download in progress: 26.03%

Download in progress: 26.05%

Download in progress: 26.06%

Download in progress: 26.08%

Download in progress: 26.10%

Download in progress: 26.12%

Download in progress: 26.13%

Download in progress: 26.15%

Download in progress: 26.17%

Download in progress: 26.19%

Download in progress: 26.20%

Download in progress: 26.22%

Download in progress: 26.24%

Download in progress: 26.26%

Download in progress: 26.27%

Download in progress: 26.29%

Download in progress: 26.31%

Download in progress: 26.33%

Download in progress: 26.34%

Download in progress: 26.36%

Download in progress: 26.38%

Download in progress: 26.40%

Download in progress: 26.41%

Download in progress: 26.43%

Download in progress: 26.45%

Download in progress: 26.47%

Download in progress: 26.48%

Download in progress: 26.50%

Download in progress: 26.52%

Download in progress: 26.54%

Download in progress: 26.55%

Download in progress: 26.57%

Download in progress: 26.59%

Download in progress: 26.61%

Download in progress: 26.62%

Download in progress: 26.64%

Download in progress: 26.66%

Download in progress: 26.68%

Download in progress: 26.69%

Download in progress: 26.71%

Download in progress: 26.73%

Download in progress: 26.75%

Download in progress: 26.76%

Download in progress: 26.78%

Download in progress: 26.80%

Download in progress: 26.82%

Download in progress: 26.83%

Download in progress: 26.85%

Download in progress: 26.87%

Download in progress: 26.89%

Download in progress: 26.90%

Download in progress: 26.92%

Download in progress: 26.94%

Download in progress: 26.96%

Download in progress: 26.97%

Download in progress: 26.99%

Download in progress: 27.01%

Download in progress: 27.03%

Download in progress: 27.04%

Download in progress: 27.06%

Download in progress: 27.08%

Download in progress: 27.10%

Download in progress: 27.11%

Download in progress: 27.13%

Download in progress: 27.15%

Download in progress: 27.17%

Download in progress: 27.18%

Download in progress: 27.20%

Download in progress: 27.22%

Download in progress: 27.24%

Download in progress: 27.25%

Download in progress: 27.27%

Download in progress: 27.29%

Download in progress: 27.31%

Download in progress: 27.32%

Download in progress: 27.34%

Download in progress: 27.36%

Download in progress: 27.38%

Download in progress: 27.39%

Download in progress: 27.41%

Download in progress: 27.43%

Download in progress: 27.45%

Download in progress: 27.46%

Download in progress: 27.48%

Download in progress: 27.50%

Download in progress: 27.52%

Download in progress: 27.53%

Download in progress: 27.55%

Download in progress: 27.57%

Download in progress: 27.59%

Download in progress: 27.60%

Download in progress: 27.62%

Download in progress: 27.64%

Download in progress: 27.66%

Download in progress: 27.67%

Download in progress: 27.69%

Download in progress: 27.71%

Download in progress: 27.73%

Download in progress: 27.74%

Download in progress: 27.76%

Download in progress: 27.78%

Download in progress: 27.80%

Download in progress: 27.81%

Download in progress: 27.83%

Download in progress: 27.85%

Download in progress: 27.87%

Download in progress: 27.88%

Download in progress: 27.90%

Download in progress: 27.92%

Download in progress: 27.94%

Download in progress: 27.95%

Download in progress: 27.97%

Download in progress: 27.99%

Download in progress: 28.01%

Download in progress: 28.02%

Download in progress: 28.04%

Download in progress: 28.06%

Download in progress: 28.08%

Download in progress: 28.09%

Download in progress: 28.11%

Download in progress: 28.13%

Download in progress: 28.15%

Download in progress: 28.16%

Download in progress: 28.18%

Download in progress: 28.20%

Download in progress: 28.22%

Download in progress: 28.23%

Download in progress: 28.25%

Download in progress: 28.27%

Download in progress: 28.29%

Download in progress: 28.30%

Download in progress: 28.32%

Download in progress: 28.34%

Download in progress: 28.36%

Download in progress: 28.37%

Download in progress: 28.39%

Download in progress: 28.41%

Download in progress: 28.42%

Download in progress: 28.44%

Download in progress: 28.46%

Download in progress: 28.48%

Download in progress: 28.49%

Download in progress: 28.51%

Download in progress: 28.53%

Download in progress: 28.55%

Download in progress: 28.56%

Download in progress: 28.58%

Download in progress: 28.60%

Download in progress: 28.62%

Download in progress: 28.63%

Download in progress: 28.65%

Download in progress: 28.67%

Download in progress: 28.69%

Download in progress: 28.70%

Download in progress: 28.72%

Download in progress: 28.74%

Download in progress: 28.76%

Download in progress: 28.77%

Download in progress: 28.79%

Download in progress: 28.81%

Download in progress: 28.83%

Download in progress: 28.84%

Download in progress: 28.86%

Download in progress: 28.88%

Download in progress: 28.90%

Download in progress: 28.91%

Download in progress: 28.93%

Download in progress: 28.95%

Download in progress: 28.97%

Download in progress: 28.98%

Download in progress: 29.00%

Download in progress: 29.02%

Download in progress: 29.04%

Download in progress: 29.05%

Download in progress: 29.07%

Download in progress: 29.09%

Download in progress: 29.11%

Download in progress: 29.12%

Download in progress: 29.14%

Download in progress: 29.16%

Download in progress: 29.18%

Download in progress: 29.19%

Download in progress: 29.21%

Download in progress: 29.23%

Download in progress: 29.25%

Download in progress: 29.26%

Download in progress: 29.28%

Download in progress: 29.30%

Download in progress: 29.32%

Download in progress: 29.33%

Download in progress: 29.35%

Download in progress: 29.37%

Download in progress: 29.39%

Download in progress: 29.40%

Download in progress: 29.42%

Download in progress: 29.44%

Download in progress: 29.46%

Download in progress: 29.47%

Download in progress: 29.49%

Download in progress: 29.51%

Download in progress: 29.53%

Download in progress: 29.54%

Download in progress: 29.56%

Download in progress: 29.58%

Download in progress: 29.60%

Download in progress: 29.61%

Download in progress: 29.63%

Download in progress: 29.65%

Download in progress: 29.67%

Download in progress: 29.68%

Download in progress: 29.70%

Download in progress: 29.72%

Download in progress: 29.74%

Download in progress: 29.75%

Download in progress: 29.77%

Download in progress: 29.79%

Download in progress: 29.81%

Download in progress: 29.82%

Download in progress: 29.84%

Download in progress: 29.86%

Download in progress: 29.88%

Download in progress: 29.89%

Download in progress: 29.91%

Download in progress: 29.93%

Download in progress: 29.95%

Download in progress: 29.96%

Download in progress: 29.98%

Download in progress: 30.00%

Download in progress: 30.02%

Download in progress: 30.03%

Download in progress: 30.05%

Download in progress: 30.07%

Download in progress: 30.09%

Download in progress: 30.10%

Download in progress: 30.12%

Download in progress: 30.14%

Download in progress: 30.16%

Download in progress: 30.17%

Download in progress: 30.19%

Download in progress: 30.21%

Download in progress: 30.23%

Download in progress: 30.24%

Download in progress: 30.26%

Download in progress: 30.28%

Download in progress: 30.30%

Download in progress: 30.31%

Download in progress: 30.33%

Download in progress: 30.35%

Download in progress: 30.37%

Download in progress: 30.38%

Download in progress: 30.40%

Download in progress: 30.42%

Download in progress: 30.44%

Download in progress: 30.45%

Download in progress: 30.47%

Download in progress: 30.49%

Download in progress: 30.51%

Download in progress: 30.52%

Download in progress: 30.54%

Download in progress: 30.56%

Download in progress: 30.58%

Download in progress: 30.59%

Download in progress: 30.61%

Download in progress: 30.63%

Download in progress: 30.65%

Download in progress: 30.66%

Download in progress: 30.68%

Download in progress: 30.70%

Download in progress: 30.72%

Download in progress: 30.73%

Download in progress: 30.75%

Download in progress: 30.77%

Download in progress: 30.78%

Download in progress: 30.80%

Download in progress: 30.82%

Download in progress: 30.84%

Download in progress: 30.85%

Download in progress: 30.87%

Download in progress: 30.89%

Download in progress: 30.91%

Download in progress: 30.92%

Download in progress: 30.94%

Download in progress: 30.96%

Download in progress: 30.98%

Download in progress: 30.99%

Download in progress: 31.01%

Download in progress: 31.03%

Download in progress: 31.05%

Download in progress: 31.06%

Download in progress: 31.08%

Download in progress: 31.10%

Download in progress: 31.12%

Download in progress: 31.13%

Download in progress: 31.15%

Download in progress: 31.17%

Download in progress: 31.19%

Download in progress: 31.20%

Download in progress: 31.22%

Download in progress: 31.24%

Download in progress: 31.26%

Download in progress: 31.27%

Download in progress: 31.29%

Download in progress: 31.31%

Download in progress: 31.33%

Download in progress: 31.34%

Download in progress: 31.36%

Download in progress: 31.38%

Download in progress: 31.40%

Download in progress: 31.41%

Download in progress: 31.43%

Download in progress: 31.45%

Download in progress: 31.47%

Download in progress: 31.48%

Download in progress: 31.50%

Download in progress: 31.52%

Download in progress: 31.54%

Download in progress: 31.55%

Download in progress: 31.57%

Download in progress: 31.59%

Download in progress: 31.61%

Download in progress: 31.62%

Download in progress: 31.64%

Download in progress: 31.66%

Download in progress: 31.68%

Download in progress: 31.69%

Download in progress: 31.71%

Download in progress: 31.73%

Download in progress: 31.75%

Download in progress: 31.76%

Download in progress: 31.78%

Download in progress: 31.80%

Download in progress: 31.82%

Download in progress: 31.83%

Download in progress: 31.85%

Download in progress: 31.87%

Download in progress: 31.89%

Download in progress: 31.90%

Download in progress: 31.92%

Download in progress: 31.94%

Download in progress: 31.96%

Download in progress: 31.97%

Download in progress: 31.99%

Download in progress: 32.01%

Download in progress: 32.03%

Download in progress: 32.04%

Download in progress: 32.06%

Download in progress: 32.08%

Download in progress: 32.10%

Download in progress: 32.11%

Download in progress: 32.13%

Download in progress: 32.15%

Download in progress: 32.17%

Download in progress: 32.18%

Download in progress: 32.20%

Download in progress: 32.22%

Download in progress: 32.24%

Download in progress: 32.25%

Download in progress: 32.27%

Download in progress: 32.29%

Download in progress: 32.31%

Download in progress: 32.32%

Download in progress: 32.34%

Download in progress: 32.36%

Download in progress: 32.38%

Download in progress: 32.39%

Download in progress: 32.41%

Download in progress: 32.43%

Download in progress: 32.45%

Download in progress: 32.46%

Download in progress: 32.48%

Download in progress: 32.50%

Download in progress: 32.52%

Download in progress: 32.53%

Download in progress: 32.55%

Download in progress: 32.57%

Download in progress: 32.59%

Download in progress: 32.60%

Download in progress: 32.62%

Download in progress: 32.64%

Download in progress: 32.66%

Download in progress: 32.67%

Download in progress: 32.69%

Download in progress: 32.71%

Download in progress: 32.73%

Download in progress: 32.74%

Download in progress: 32.76%

Download in progress: 32.78%

Download in progress: 32.80%

Download in progress: 32.81%

Download in progress: 32.83%

Download in progress: 32.85%

Download in progress: 32.87%

Download in progress: 32.88%

Download in progress: 32.90%

Download in progress: 32.92%

Download in progress: 32.94%

Download in progress: 32.95%

Download in progress: 32.97%

Download in progress: 32.99%

Download in progress: 33.01%

Download in progress: 33.02%

Download in progress: 33.04%

Download in progress: 33.06%

Download in progress: 33.08%

Download in progress: 33.09%

Download in progress: 33.11%

Download in progress: 33.13%

Download in progress: 33.14%

Download in progress: 33.16%

Download in progress: 33.18%

Download in progress: 33.20%

Download in progress: 33.21%

Download in progress: 33.23%

Download in progress: 33.25%

Download in progress: 33.27%

Download in progress: 33.28%

Download in progress: 33.30%

Download in progress: 33.32%

Download in progress: 33.34%

Download in progress: 33.35%

Download in progress: 33.37%

Download in progress: 33.39%

Download in progress: 33.41%

Download in progress: 33.42%

Download in progress: 33.44%

Download in progress: 33.46%

Download in progress: 33.48%

Download in progress: 33.49%

Download in progress: 33.51%

Download in progress: 33.53%

Download in progress: 33.55%

Download in progress: 33.56%

Download in progress: 33.58%

Download in progress: 33.60%

Download in progress: 33.62%

Download in progress: 33.63%

Download in progress: 33.65%

Download in progress: 33.67%

Download in progress: 33.69%

Download in progress: 33.70%

Download in progress: 33.72%

Download in progress: 33.74%

Download in progress: 33.76%

Download in progress: 33.77%

Download in progress: 33.79%

Download in progress: 33.81%

Download in progress: 33.83%

Download in progress: 33.84%

Download in progress: 33.86%

Download in progress: 33.88%

Download in progress: 33.90%

Download in progress: 33.91%

Download in progress: 33.93%

Download in progress: 33.95%

Download in progress: 33.97%

Download in progress: 33.98%

Download in progress: 34.00%

Download in progress: 34.02%

Download in progress: 34.04%

Download in progress: 34.05%

Download in progress: 34.07%

Download in progress: 34.09%

Download in progress: 34.11%

Download in progress: 34.12%

Download in progress: 34.14%

Download in progress: 34.16%

Download in progress: 34.18%

Download in progress: 34.19%

Download in progress: 34.21%

Download in progress: 34.23%

Download in progress: 34.25%

Download in progress: 34.26%

Download in progress: 34.28%

Download in progress: 34.30%

Download in progress: 34.32%

Download in progress: 34.33%

Download in progress: 34.35%

Download in progress: 34.37%

Download in progress: 34.39%

Download in progress: 34.40%

Download in progress: 34.42%

Download in progress: 34.44%

Download in progress: 34.46%

Download in progress: 34.47%

Download in progress: 34.49%

Download in progress: 34.51%

Download in progress: 34.53%

Download in progress: 34.54%

Download in progress: 34.56%

Download in progress: 34.58%

Download in progress: 34.60%

Download in progress: 34.61%

Download in progress: 34.63%

Download in progress: 34.65%

Download in progress: 34.67%

Download in progress: 34.68%

Download in progress: 34.70%

Download in progress: 34.72%

Download in progress: 34.74%

Download in progress: 34.75%

Download in progress: 34.77%

Download in progress: 34.79%

Download in progress: 34.81%

Download in progress: 34.82%

Download in progress: 34.84%

Download in progress: 34.86%

Download in progress: 34.88%

Download in progress: 34.89%

Download in progress: 34.91%

Download in progress: 34.93%

Download in progress: 34.95%

Download in progress: 34.96%

Download in progress: 34.98%

Download in progress: 35.00%

Download in progress: 35.02%

Download in progress: 35.03%

Download in progress: 35.05%

Download in progress: 35.07%

Download in progress: 35.09%

Download in progress: 35.10%

Download in progress: 35.12%

Download in progress: 35.14%

Download in progress: 35.16%

Download in progress: 35.17%

Download in progress: 35.19%

Download in progress: 35.21%

Download in progress: 35.23%

Download in progress: 35.24%

Download in progress: 35.26%

Download in progress: 35.28%

Download in progress: 35.30%

Download in progress: 35.31%

Download in progress: 35.33%

Download in progress: 35.35%

Download in progress: 35.37%

Download in progress: 35.38%

Download in progress: 35.40%

Download in progress: 35.42%

Download in progress: 35.44%

Download in progress: 35.45%

Download in progress: 35.47%

Download in progress: 35.49%

Download in progress: 35.50%

Download in progress: 35.52%

Download in progress: 35.54%

Download in progress: 35.56%

Download in progress: 35.57%

Download in progress: 35.59%

Download in progress: 35.61%

Download in progress: 35.63%

Download in progress: 35.64%

Download in progress: 35.66%

Download in progress: 35.68%

Download in progress: 35.70%

Download in progress: 35.71%

Download in progress: 35.73%

Download in progress: 35.75%

Download in progress: 35.77%

Download in progress: 35.78%

Download in progress: 35.80%

Download in progress: 35.82%

Download in progress: 35.84%

Download in progress: 35.85%
